AI Outperforms Human Radiologists and Pathologists

AI is now demonstrably more accurate, faster and cheaper than human radiologists. It’s not perfect — it has an error rate — but that rate is significantly lower than that of human radiologists. We still need humans to check over the results generated by AI, but we need only a small fraction of the radiologists now employed. As you can imagine, these physicians, despite the huge advantages of AI to patients, are fighting this tooth and nail. Next, after radiologists, the need for pathologists will be significantly reduced. We’ll need some, again, to confirm the results generated by AI. During this transition, the powers that be will do everything they can to slow the process down.

The human eye and brain are subject to fatigue. Training biases them. Expertise sharpens some edges and blunts others. AI never tires. It is capable of sifting through millions of pixels and billions of data points to see what no physician can. And every day AI benefits from retraining, from the feedback loop as it is fed the results of its diagnoses, accurate and inaccurate. Every day its reliability improves a little; every day its advantage over humans widens.

As Dr. Curtis Langlotz at Stanford University explains, artificial intelligence is changing everything in diagnosis.

“I remember the exact moment I realized AI would change everything,” Langlotz recalls. “I was reviewing chest X-rays late one evening when our experimental AI system flagged a subtle nodule I had missed.”

Few cancers illustrate the stakes more starkly than lung cancer.

Lung cancer remains the leading cause of cancer death worldwide, in large part because it is usually diagnosed too late. Small nodules on CT scans may or may not be malignant. Radiologists often must decide whether to dismiss, monitor, or biopsy — and their error rate costs lives.

At Google Health, Dr. Shravya Shetty and her team took on this challenge. Working with the National Cancer Institute and major medical centers, they developed an AI system that analyzes not just individual CT slices but the entire three-dimensional structure of the lungs. The system also tracks changes over time, learning the subtle textures and growth patterns invisible to the human eye.

Dr. Michael Gould, a pulmonologist at Kaiser Permanente who collaborated on the project, was astonished:

“The AI system could predict which small nodules would become cancerous with 94% accuracy. That’s better than most experienced thoracic radiologists.”

The bigger story came when the model was put into practice. At Intermountain Healthcare, thoracic surgeon Dr. Thomas Varghese oversaw deployment across the network. “We went from missing 20% of early-stage cancers to missing less than 5%,” he reported. “That translates to hundreds of lives saved each year in our system alone.”
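
To make the arithmetic behind those miss rates concrete, here is a minimal Python sketch. The figure of 1,000 early-stage cancers is a hypothetical volume chosen only for illustration, not a number from Intermountain, and 5% is treated as an upper bound because the quote says "less than 5%."

```python
def cancers_missed(total_cancers: int, miss_rate: float) -> int:
    """Expected number of cancers missed at a given miss rate."""
    return round(total_cancers * miss_rate)


# Hypothetical volume, chosen only to make the arithmetic concrete.
EARLY_STAGE_CANCERS = 1000

missed_before = cancers_missed(EARLY_STAGE_CANCERS, 0.20)  # 20% missed
missed_after = cancers_missed(EARLY_STAGE_CANCERS, 0.05)   # at most 5% missed

# Sensitivity is the share of cancers that are caught: 1 minus the miss rate.
sensitivity_before = 1 - 0.20
sensitivity_after = 1 - 0.05

additional_caught = missed_before - missed_after

print(f"Missed before AI: {missed_before}")
print(f"Missed with AI:   {missed_after}")
print(f"Additional cancers caught per {EARLY_STAGE_CANCERS}: {additional_caught}")
```

Under these assumptions, a drop from 20% to 5% means at least 150 additional early-stage cancers caught for every 1,000 present.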

Case studies from the front lines

Across the country, AI is proving itself but is not being adopted as standard practice:

Chicago, lung cancer screening: Radiologists reviewing low-dose CT scans used an AI system that automatically ranked nodules by malignancy risk. The AI didn’t replace biopsy or follow-up imaging, but it made sure no suspicious lesion got lost in the shuffle.

These AI-based systems for lung nodule detection, characterization, triage, and malignancy risk have been validated in clinical trials. An example: clinical trial NCT07052773 (“Clinical Evaluation of the Lung Cancer AI-based Decision Support”). Nevertheless, they are not being used or incorporated into standard practice.

One company, Aidence, offers “Veye Lung Nodules,” a diagnostic tool used in some European hospitals but not adopted in the US.
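
The ranking step described in the Chicago example can be pictured with a short Python sketch. Everything in it is hypothetical: the `Nodule` fields, the risk scores, and the 0.05 review threshold are illustrative assumptions, not details of Veye Lung Nodules or any other product.

```python
from dataclasses import dataclass


@dataclass
class Nodule:
    scan_id: str            # hypothetical identifier
    diameter_mm: float
    malignancy_risk: float  # hypothetical model output between 0 and 1


def rank_worklist(nodules, review_threshold=0.05):
    """Return (nodule, needs_review) pairs, highest risk first.

    Every nodule stays on the list; the flag only marks the ones
    at or above the (assumed) review threshold.
    """
    ranked = sorted(nodules, key=lambda n: n.malignancy_risk, reverse=True)
    return [(n, n.malignancy_risk >= review_threshold) for n in ranked]


# Made-up findings for illustration only.
findings = [
    Nodule("scan-A", 4.0, 0.02),
    Nodule("scan-B", 9.5, 0.41),
    Nodule("scan-C", 6.2, 0.12),
]

worklist = rank_worklist(findings)
for nodule, needs_review in worklist:
    flag = "REVIEW" if needs_review else "routine"
    print(f"{nodule.scan_id}: risk {nodule.malignancy_risk:.2f} ({flag})")
```

Nothing is dropped from the list in this sketch; the riskiest findings simply move to the top, which is how a lesion avoids getting lost in the shuffle.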

Digital pathology, borderline prostate cancer: AI overlays helped pathologists resolve borderline prostate cancer cases. In multi-reader trials, accuracy improved, turnaround time dropped, and consistency rose. These digital pathology overlays are closer to real-world adoption in some labs, though not in most hospitals.

Paige Prostate received FDA authorization in 2021 and is in use in a few locations in the US. Use requires infrastructure (digital pathology scanners, slide digitization, image management systems). Many labs still rely on glass-slide-microscope workflows, which is a barrier to broad adoption. To the extent that investment in digital capability requires pathologists to submit budget requests to their hospitals, they are likely reluctant to do so, since the technology replaces a substantial portion of their jobs.

NYU Langone launched a digital pathology program across its network.

Ibex Prostate Detect is being deployed at UTMB and at Alverno Labs, a large Midwest network. These systems create heatmaps and alerts to aid routine reads and are being deployed in clinical workflows.

Bottom line: FDA-authorized AI diagnostic tools are being implemented in a few hospitals, including in New York (e.g., NYU’s digital pathology infrastructure; Paige’s MSK roots; Mount Sinai’s AI pathology research and deployments), but are not being widely deployed, despite their clear advantages in accuracy, efficiency and cost. Said another way, these technologies could replace most, though certainly not all, human radiologists and pathologists, and for that reason are not being adopted.

Seeing beyond the human eye

The new AI-assisted tools are especially useful in pediatric oncology, where many doctors see only a handful of rare cancers in their entire careers. Dr. Kristen Yeom, a pediatric radiologist at Stanford, faced this challenge in diagnosing brain tumors in children.

With colleagues, she helped build an AI system that analyzed pediatric brain MRIs. At first, it simply confirmed what experts already knew. Then it began identifying features even experienced neuroradiologists had never recognized.

“The AI was picking up on subtle patterns in how water molecules moved through brain tissue,” Yeom recalled. “These were invisible to human eyes but turned out to be early indicators of tumor infiltration.”

At Boston Children’s Hospital, Dr. Tina Young Poussaint put the system into clinical use. “We reduced our diagnostic uncertainty by 60%,” she said. “For parents facing their child’s potential cancer diagnosis, that certainty is invaluable.”

The benefits extended to treatment planning. Dr. Michelle Monje, a pediatric neuro-oncologist at Stanford, used the AI to map tumor boundaries, enabling surgeons to remove malignant tissue while preserving healthy brain function. Dr. Samuel Cheshier, a pediatric neurosurgeon, described the change simply:

“AI has become our GPS for navigating the brain.”

Decoding the genome’s hidden language

Patterns aren’t confined to images. Cancer also hides its signals deep within the genome. In the early 2000s, Dr. Victor Velculescu at Johns Hopkins recalled feeling overwhelmed after sequencing cancer genomes.

“We had all this genetic data, but it was like having a library in a foreign language.”

At the Broad Institute, Dr. Gad Getz built AI algorithms that could read this hidden language. His lab trained models on tens of thousands of patient genomes to distinguish driver mutations from harmless passengers. Collaborating with Dr. Shamil Sunyaev at Brigham and Women’s, they pushed prediction accuracy for harmful variants to above 90% and reduced false positives by up to 20% in some settings.

This marked a leap from studying individual genes to modeling entire networks of genetic interactions. What once took years of painstaking analysis now emerged in weeks, even days, giving oncologists insight into which mutations truly mattered.

CheXNet — the Stanford breakthrough

Stanford’s AI lab, led first by Dr. Andrew Ng and later Dr. Pranav Rajpurkar, developed one of the first landmark systems: CheXNet, a deep learning model that could identify 14 different pathologies in chest X-rays with accuracy on par with expert radiologists.

The real breakthrough was not only diagnostic accuracy — it was speed and consistency. It takes AI seconds to read a CT scan or MRI; it might take a radiologist five minutes or more. And, as Dr. Matthew Lungren, a radiologist and AI researcher at Stanford, explained:

“A radiologist might read 100 chest X-rays in a day and perform at 95% accuracy when fresh, but that accuracy drops with fatigue. AI never gets tired, never has a bad day, and can process thousands of images with unwavering precision.”

Radiology images are digital, high-volume, and standardized — ideal fuel for AI training. That has made radiology one of medicine’s earliest proving grounds for AI. And some say the first victim.

CHIEF — A universal cancer detector

If radiology was AI’s first proving ground, pathology may be its most ambitious. Pathologists spend hours analyzing tissue under microscopes — a process that is time-consuming and subjective. With the digitization of pathology slides, AI is transforming this field.

In 2024, researchers at Harvard Medical School introduced CHIEF (Clinical Histopathology Imaging Evaluation Foundation), trained on 44 terabytes of pathology data across millions of slides. The results were remarkable:

96% accuracy across 19 cancer types.

Ability to predict survival outcomes directly from pathology images.

Identification of genetic mutations without costly genetic testing.

Chamath Palihapitiya, a well-known venture capitalist, called it a paradigm shift: “Through more efficient evaluations, CHIEF could enable better diagnosis and treatment.” Dr. Pranav Rajpurkar, involved in its development, emphasized its robustness: CHIEF was validated on 32 datasets from 24 hospitals, overcoming one of the biggest challenges of AI, which is failing outside the lab where it was built.

Tissue slides hold more than meets the eye. AI can detect hidden patterns — clues to prognosis or mutations — that even the most experienced pathologists cannot see.

Large language models

AI’s promise extends beyond images. In 2024, a randomized clinical trial published in JAMA Network Open compared physicians’ diagnostic reasoning with and without access to a commercially available large language model (LLM).

On its own, the LLM scored a median of 92% per case — outperforming physicians.

Yet physicians paired with the LLM scored a median of 76%, not significantly better than the 74% scored by physicians working with conventional resources alone.

The paradox was striking: the AI alone outperformed both groups of humans, but simply giving it to doctors didn’t help. Researchers suggested two reasons:

Physicians were not trained in how to interact effectively with AI.

AI needs to be integrated into clinical workflows, and that won’t happen on its own. A better answer helps only if clinicians pay attention to it and incorporate it into treatment decisions.

Beyond raw capability (AI can see things that humans can’t, and it doesn’t experience fatigue), another issue is human bias. Humans, protective of skills they’ve put years into developing, dismiss AI as unnecessary or error-prone despite evidence to the contrary. A study grounded in the Dunning–Kruger effect found that individuals who overrate their own performance are less likely to rely on AI, hindering optimal collaboration. (Cornell study: Knowing About Knowing: An Illusion of Human Competence Can Hinder Appropriate Reliance on AI Systems.)

There are also legal and ethical concerns that shape skepticism. For instance, when AI flags an abnormality that a radiologist missed, the radiologist’s perceived liability can increase (an “AI penalty”), so professionals may unconsciously emphasize AI’s limitations to protect themselves. A study led by Brown researchers suggested that radiologists are viewed as more culpable if they fail to find an abnormality detected by AI, which means malpractice suits may become more complex as AI spreads. (See: Use of AI complicates legal liabilities for radiologists, study finds. July 28, 2025.) In other words, among radiologists and pathologists, AI is widely considered to be a threat. It is.

AI is more predictive than humans by a wide margin

The advantage of AI is particularly profound when it comes to predicting months or years in advance which patients will get cancer.

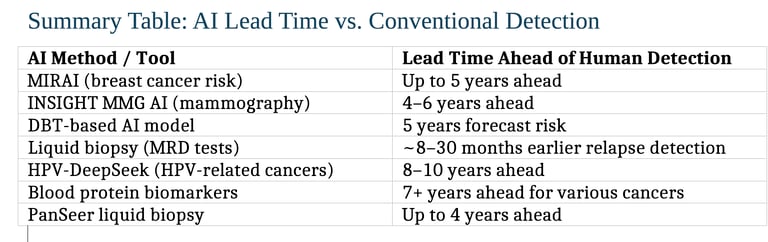

1. Mammography-Based AI (2–6 years ahead)

The MIRAI model predicts the risk of developing breast cancer up to five years in advance, demonstrating strong predictive accuracy and cross-population validation. (Sources: Massachusetts General Hospital; NAM.)

Another study using commercial AI algorithms (INSIGHT MMG) found that AI scores were significantly higher 4–6 years before clinical detection, with AUC values climbing over time, showing meaningful lead time for early intervention. (Sources: JAMA Network; News-Medical.)
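
For readers unfamiliar with AUC (area under the ROC curve), it can be read as the probability that a randomly chosen patient who later developed cancer received a higher AI score than a randomly chosen patient who did not. A minimal Python sketch of that definition follows; the scores are invented for illustration and do not come from the study.

```python
def auc(scores_cancer, scores_no_cancer):
    """AUC as the fraction of (cancer, no-cancer) pairs ranked correctly.

    Ties count as half a correct pair.
    """
    correct = 0.0
    for c in scores_cancer:
        for n in scores_no_cancer:
            if c > n:
                correct += 1.0
            elif c == n:
                correct += 0.5
    return correct / (len(scores_cancer) * len(scores_no_cancer))


# Invented AI scores, for illustration only.
later_diagnosed = [0.9, 0.7, 0.6, 0.4]
never_diagnosed = [0.5, 0.3, 0.2, 0.1]

print(auc(later_diagnosed, never_diagnosed))  # 15 of 16 pairs correct: 0.9375
```

An AUC of 0.5 means the scores separate the two groups no better than a coin flip; 1.0 means perfect separation.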

2. Digital Breast Tomosynthesis (5-Year Forecast)

A deep learning model using digital breast tomosynthesis (DBT) forecasts an individual’s 5-year breast cancer risk. It is based on an AI system that analyzes ultrasound images with an accuracy rate of 80%, significantly higher than human pathologists.

3. Liquid Biopsy and Circulating DNA (Months to Years ahead)

Technologies like Guardant’s Reveal, Natera’s Signatera, and others detect cancer relapse from roughly 8 months to more than 28 months earlier than traditional imaging.

4. HPV-Related Head and Neck Cancers (Up to ~10 years ahead)

The HPV-DeepSeek test identifies HPV-associated head and neck cancers up to 8–10 years before symptoms or clinical diagnosis by detecting tumor DNA fragments via machine learning-enhanced blood testing.

5. Blood Protein Biomarkers (7+ Years ahead)

A study from the UK Biobank found that certain blood proteins correlated with a cancer diagnosis over seven years later, suggesting long-term early warning potential.

PanSeer, a noninvasive liquid biopsy, detected several cancer types in 95% of asymptomatic individuals who were later diagnosed — even up to four years before standard diagnosis. This extended lead time opens new possibilities for earlier intervention, targeted preventive screening, and meaningful improvements in patient outcomes.

Self-diagnosis with AI

Not all medical AI flows through hospitals. Increasingly, patients are using AI tools directly. Some stories are controversial, but others are lifesaving.

Lauren Bannon, dismissed with “acid reflux,” asked ChatGPT about her symptoms in 2025. It suggested Hashimoto’s thyroiditis, prompting further tests that revealed a neck tumor. “AI saved my life,” she said.

Marly Garnreiter had unexplained symptoms that stumped specialists until AI flagged Hodgkin lymphoma — confirmed months later.

Sam West used AI-driven statistics to identify a rare tumor variant six months before experts recognized it.

Robert Wright, after noticing a neck lump, turned to AI for guidance. It urged him to seek care, revealing throat cancer. Now cancer-free after immunotherapy, he hosts a podcast on AI in health.

These cases highlight both AI’s democratizing power and its risks. While AI can push patients toward timely care, it can also mislead, over-reassure, or spark unnecessary anxiety.

A note of caution: I am concerned that people with a life-threatening condition might conclude from my work that physicians and hospitals are best avoided. Don’t do that. It will likely end in disaster. Use AI to choose which hospital or clinic to go to for treatment, to prepare questions prior to doctor visits, and to consider, with your doctor, which treatments to avoid based on quality-of-life considerations as well as longevity. AI can be an invaluable, life-saving tool, but doctors and hospitals are crucial to your recovery.

Conclusion

Across radiology, pathology, diagnostic reasoning, and even patient self-advocacy, AI is establishing itself as better at diagnosis than traditional methods. Traditional diagnostics still have a role to play, but a diminished one. As patients, we don’t want to accept an AI-generated diagnosis and proceed with surgery, for instance, without a human expert reviewing the AI’s conclusion.

In radiology, tools like CheXNet read images quickly and consistently.

In pathology, CHIEF shows the potential of universal multi-cancer detection.

In clinical reasoning, LLMs show the potential for enormous contributions to patient survival. They are not being widely used.

For patients, AI empowers — but has major limitations. As patients, we should not conclude that physicians no longer have a role to play. They do. An important role.

Though many of these new technologies have been repeatedly validated, clinical adoption is slow, much slower than it should be. Physicians are reluctant to adopt technologies that make their years of training obsolete.

. . .

Email me at Emerging Cures if you’d like to talk, rod@emergingcures.org.
